Clustering algorithms play a vital role in data analysis and machine learning, enabling the grouping of data points with similar characteristics. Whether it’s customer segmentation, image processing, or pattern recognition, clustering is an essential tool. Among clustering algorithms, K-Means is one of the most widely used, thanks to its simplicity and effectiveness. But what sets K-Means apart, and how does it compare to other clustering techniques? In this post, we’ll explore K-Means and several other clustering techniques, along with their applications and how they differ.

Clustering Algorithms: An Overview of K-Means and More

What is K-Means Clustering in Data Analysis?

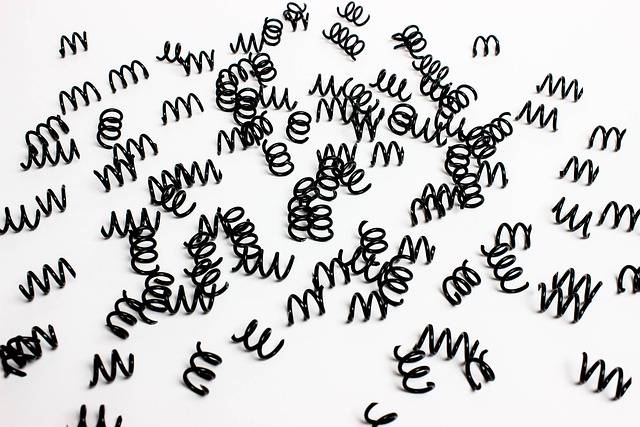

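K-Means clustering is a partitioning algorithm that divides data into a predefined number of clusters, k, by grouping each point with its nearest cluster center (centroid), so that points in the same cluster are similar to one another.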
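
Formally, standard K-Means looks for the partition of the data into clusters $C_1, \dots, C_k$ that minimizes the within-cluster sum of squared Euclidean distances, where $\mu_j$ is the mean (centroid) of the points in $C_j$:

```latex
J = \sum_{j=1}^{k} \sum_{x_i \in C_j} \lVert x_i - \mu_j \rVert^2,
\qquad
\mu_j = \frac{1}{|C_j|} \sum_{x_i \in C_j} x_i
```

"Similarity" in K-Means therefore means a small Euclidean distance to the centroid, which is why it favors compact, roughly spherical clusters. Finding the exact minimum of $J$ is computationally hard in general, so K-Means uses an iterative heuristic instead.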

- How K-Means Works:

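K-Means begins by choosing k initial centroids, often by picking k data points at random. It then alternates two steps: it assigns each data point to its nearest centroid, and it recomputes each centroid as the mean of the points assigned to it. The loop stops when the assignments no longer change (or after a maximum number of iterations). Neither step can increase the total within-cluster distance, so the algorithm always converges, but it may settle on a local optimum that depends on the starting centroids.

- Applications of K-Means: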

K-Means is extensively used in market segmentation, image compression, and anomaly detection. For instance, businesses use it to group customers by purchasing behavior for targeted marketing campaigns, and image compression can use it to replace each pixel’s color with the nearest of k representative colors.

- Advantages of K-Means:

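K-Means is computationally efficient: each iteration takes time roughly proportional to the number of points times the number of clusters times the number of features, so it scales well to large datasets. Its simplicity also makes it a go-to choice for beginners in machine learning.

- Limitations of K-Means: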

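Despite its popularity, K-Means has its challenges. It requires the number of clusters k to be specified in advance, which is not always straightforward. Because it assigns points to the nearest centroid, it works best with roughly spherical, similarly sized clusters and struggles with elongated or non-convex shapes. It is also sensitive to outliers, which pull centroids toward them, and to the choice of initial centroids.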
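
The assign-then-update loop described under "How K-Means Works" is commonly known as Lloyd’s algorithm. Below is a minimal pure-Python sketch of it, assuming points are tuples of floats and that the initial centroids are k data points sampled at random; the function name `kmeans` and its parameters are illustrative, not any library’s API.

```python
import math
import random


def kmeans(points, k, max_iters=100, seed=0):
    """Cluster `points` (a list of equal-length numeric tuples) into k groups.

    Returns (centroids, labels), where labels[i] is the cluster index of points[i].
    """
    rng = random.Random(seed)
    # Initialization: k data points sampled without replacement become the centroids.
    centroids = [tuple(p) for p in rng.sample(points, k)]
    labels = None
    for _ in range(max_iters):
        # Assignment step: each point joins the cluster of its nearest centroid.
        new_labels = [
            min(range(k), key=lambda j: math.dist(p, centroids[j]))
            for p in points
        ]
        if new_labels == labels:
            break  # no assignment changed, so the algorithm has converged
        labels = new_labels
        # Update step: move each centroid to the mean of its assigned points.
        for j in range(k):
            members = [p for p, lab in zip(points, labels) if lab == j]
            if members:  # an empty cluster keeps its previous centroid
                centroids[j] = tuple(sum(dim) / len(members) for dim in zip(*members))
    return centroids, labels
```

For example, `kmeans([(1.0, 1.0), (1.5, 2.0), (1.2, 1.4), (8.0, 8.0), (8.5, 9.0), (9.0, 8.2)], k=2)` separates the three points near (1, 1) from the three near (8.5, 8.5). Because the result depends on the starting centroids, K-Means is often run several times with different seeds, keeping the solution with the lowest total within-cluster distance.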

Best Clustering Algorithms for Machine Learning

When it comes to machine learning, K-Means isn’t the only player. Various clustering algorithms are suited to different types of data and tasks.

- Hierarchical Clustering:

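Unlike K-Means, hierarchical clustering builds a tree-like hierarchy of nested clusters (a dendrogram), typically by repeatedly merging the two closest clusters (agglomerative) or by repeatedly splitting clusters apart (divisive). The number of clusters does not have to be fixed in advance, because the tree can be cut at any level. Its cost grows quickly with the number of points, so it is best suited to small datasets, especially where the relationships between clusters are important.

- DBSCAN (Density-Based Spatial Clustering of Applications with Noise):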

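DBSCAN groups points that lie in dense regions, defined by a neighborhood radius and a minimum number of neighbors, and labels points in sparse regions as noise. This makes it effective at identifying irregularly shaped clusters and handling noisy data, and it does not need the number of clusters in advance.

- Gaussian Mixture Models (GMM):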

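GMM takes a probabilistic approach: it models the data as a mixture of several Gaussian distributions, usually fitted with the expectation-maximization (EM) algorithm, and gives each point a probability of belonging to each cluster rather than a single hard label. This makes it particularly useful for overlapping clusters where rigid boundaries are unsuitable.

- Spectral Clustering: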

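This algorithm uses graph theory: it builds a similarity graph over the data points, uses eigenvectors of the graph’s Laplacian matrix to embed the points in a low-dimensional space, and then clusters that embedding (often with K-Means). It excels at separating clusters with complex, non-convex shapes and non-linear boundaries.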
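
To make DBSCAN’s density idea concrete, here is a minimal, unoptimized sketch in Python. It assumes the two usual parameters, a neighborhood radius `eps` and a minimum neighbor count `min_samples` (names borrowed from common usage, e.g. scikit-learn; the function itself is illustrative). A point with at least `min_samples` neighbors, itself included, is a core point; clusters grow outward from core points. Every pair of points is compared, so it runs in O(n²) time and is only meant for small inputs.

```python
import math


def dbscan(points, eps, min_samples):
    """Label each point with a cluster index, or -1 for noise."""
    n = len(points)
    # Precompute each point's eps-neighborhood (the point itself is included).
    neighbors = [
        [j for j in range(n) if math.dist(points[i], points[j]) <= eps]
        for i in range(n)
    ]
    labels = [None] * n  # None means "not visited yet"
    cluster = -1
    for i in range(n):
        if labels[i] is not None:
            continue
        if len(neighbors[i]) < min_samples:
            labels[i] = -1  # noise for now; may later become a border point
            continue
        cluster += 1  # i is an unvisited core point: start a new cluster
        labels[i] = cluster
        queue = list(neighbors[i])
        while queue:
            j = queue.pop()
            if labels[j] == -1:
                labels[j] = cluster  # noise reachable from a core point is a border point
            if labels[j] is not None:
                continue  # already assigned; border points are not expanded
            labels[j] = cluster
            if len(neighbors[j]) >= min_samples:
                queue.extend(neighbors[j])  # j is also a core point: keep growing
    return labels
```

With two tight groups of four points around (0.5, 0.5) and (10.5, 10.5), one isolated point at (50, 50), `eps=1.5` and `min_samples=3`, the sketch returns two clusters and marks the isolated point as `-1`. Unlike K-Means, the number of clusters is never passed in; it emerges from the density parameters.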

Applications of Clustering in Machine Learning

Clustering algorithms are versatile and are used across industries:

- Customer Segmentation:

Businesses group customers based on preferences and behaviors to tailor marketing strategies.

- Image Processing:

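Clustering is used to compress images, for example by reducing them to a small palette of representative colors, and to segment images into regions, a common step toward detecting objects.

- Healthcare: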

Clustering patient data can reveal subgroups of a disease and groups of patients with similar profiles, supporting disease classification and personalized treatment plans.

- Social Network Analysis:

Identifying communities or groups of similar users within social platforms relies heavily on clustering algorithms.

- Anomaly Detection:

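Banks and financial institutions use clustering to detect fraudulent transactions and other outliers: transactions that fall far from every cluster of normal behavior, or into no cluster at all, can be flagged for review.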
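
One simple way to use clustering for anomaly detection is to compute centroids on typical data and flag new points that lie far from all of them. The sketch below assumes the centroids were already computed (for example with K-Means) and that a distance threshold has been chosen by hand; the function name `flag_outliers` and the threshold are hypothetical.

```python
import math


def flag_outliers(points, centroids, threshold):
    """Return the indices of points farther than `threshold` from every centroid."""
    flagged = []
    for i, p in enumerate(points):
        # Distance from this point to its nearest cluster center.
        nearest = min(math.dist(p, c) for c in centroids)
        if nearest > threshold:
            flagged.append(i)
    return flagged
```

With centroids at (1, 1) and (8, 8) and a threshold of 3, the points (1.2, 0.9) and (7.5, 8.3) pass, while (4.5, 20.0), about 12.5 units from the nearest centroid, is flagged. In practice the threshold would more likely be derived from the distances observed on known-normal data than picked by hand.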

Top Clustering Techniques for Unsupervised Learning

Clustering is one of the main ways unsupervised learning uncovers hidden patterns in unlabeled data.

- Partitional Clustering:

Algorithms like K-Means divide data into non-overlapping groups, with each point belonging to exactly one cluster, which makes them suitable for straightforward segmentation.

- Fuzzy Clustering:

In fuzzy clustering, each data point can belong to several clusters at once, with a different degree of membership in each (in fuzzy c-means, a point’s memberships sum to one).

- Grid-Based Clustering:

This method divides the data space into a grid of cells and forms clusters from adjacent dense cells. It’s often used in spatial data analysis; STING and CLIQUE are well-known examples.

- Constraint-Based Clustering:

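External knowledge guides the clustering process, for example constraints stating that certain pairs of points must or must not end up in the same cluster (must-link and cannot-link constraints), so that the resulting groups are meaningful for the task.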
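
As an illustration of fuzzy clustering, the sketch below computes the membership weights that fuzzy c-means assigns to a single point, given centroids that are already known. It uses the standard fuzzy c-means membership formula with a "fuzzifier" `m > 1` (commonly set to 2); the function name is hypothetical, and a full fuzzy c-means run would alternate this step with a membership-weighted centroid update, much as K-Means alternates assignment and update.

```python
import math


def fuzzy_memberships(point, centroids, m=2.0):
    """Fuzzy c-means membership of `point` in each cluster; weights sum to 1."""
    dists = [math.dist(point, c) for c in centroids]
    # A point lying exactly on a centroid belongs fully to that cluster.
    for j, d in enumerate(dists):
        if d == 0.0:
            return [1.0 if i == j else 0.0 for i in range(len(centroids))]
    power = 2.0 / (m - 1.0)  # larger m gives softer, more evenly spread memberships
    return [1.0 / sum((d_j / d_l) ** power for d_l in dists) for d_j in dists]
```

For a point at the origin with centroids at (1, 0) and (3, 0), and `m=2`, the memberships come out at roughly 0.9 and 0.1: the point mostly belongs to the nearer cluster but keeps a partial stake in the farther one, which a hard-assignment method like K-Means cannot express.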

Conclusion

Clustering algorithms, from K-Means to DBSCAN and beyond, are indispensable tools for data analysis and machine learning. Each algorithm has unique strengths and limitations, making it essential to choose the right one based on the dataset and objective. By understanding these techniques, data scientists can unlock valuable insights and drive better decision-making across various domains.